Posts filed under ‘Evaluation methodology’

New guide: Equitable Communications Guide for Evaluators

The Equitable Communications Guide is a great new resource for evaluators. Developed by Innovation Network, the Equitable Communications Guide is designed for evaluators in the social sector, but has relevant lessons for anyone looking to improve their communications! The guide explores how to communicate equitably, center the experiences of others, and convey the meaning behind key messages. Download the free guide here: https://innonet.org/news-insights/resources/equitable-communications-guide/

How to – outcome monitoring and harvesting

During data collection in an evaluation, it is often useful to identify or verify the outcomes, both intended and unintended, that a given intervention has contributed to. One approach that has become popular is outcome monitoring or harvesting. The World Bank has produced a very useful practical guide in this area. Do take a look!

Nudge theory and evaluation

There has been a lot of talk about “nudge theory”: the idea that people can be guided, encouraged and nudged towards the right decision rather than simply being told what to do. Now the evaluation unit of WIPO, a UN agency, has produced a guide on how nudge theory can apply to evaluation. Interesting reading!

Implications of COVID-19 on evaluation

The ILO have published useful guidelines on “Implications of COVID-19 on evaluations in the ILO: Practical tips on adapting to the situation”. The guidelines are well worth a read, as they offer practical guidance for the many of us now carrying out evaluations remotely.

CART principles for monitoring

Innovations for Poverty Action have developed some useful guidance on activity monitoring and evaluation based on their own CART principles: credible, actionable, responsible, and transportable (see summary graphic below).

This is particularly useful for those interested in monitoring, an area many organisations find challenging. Read more here>>

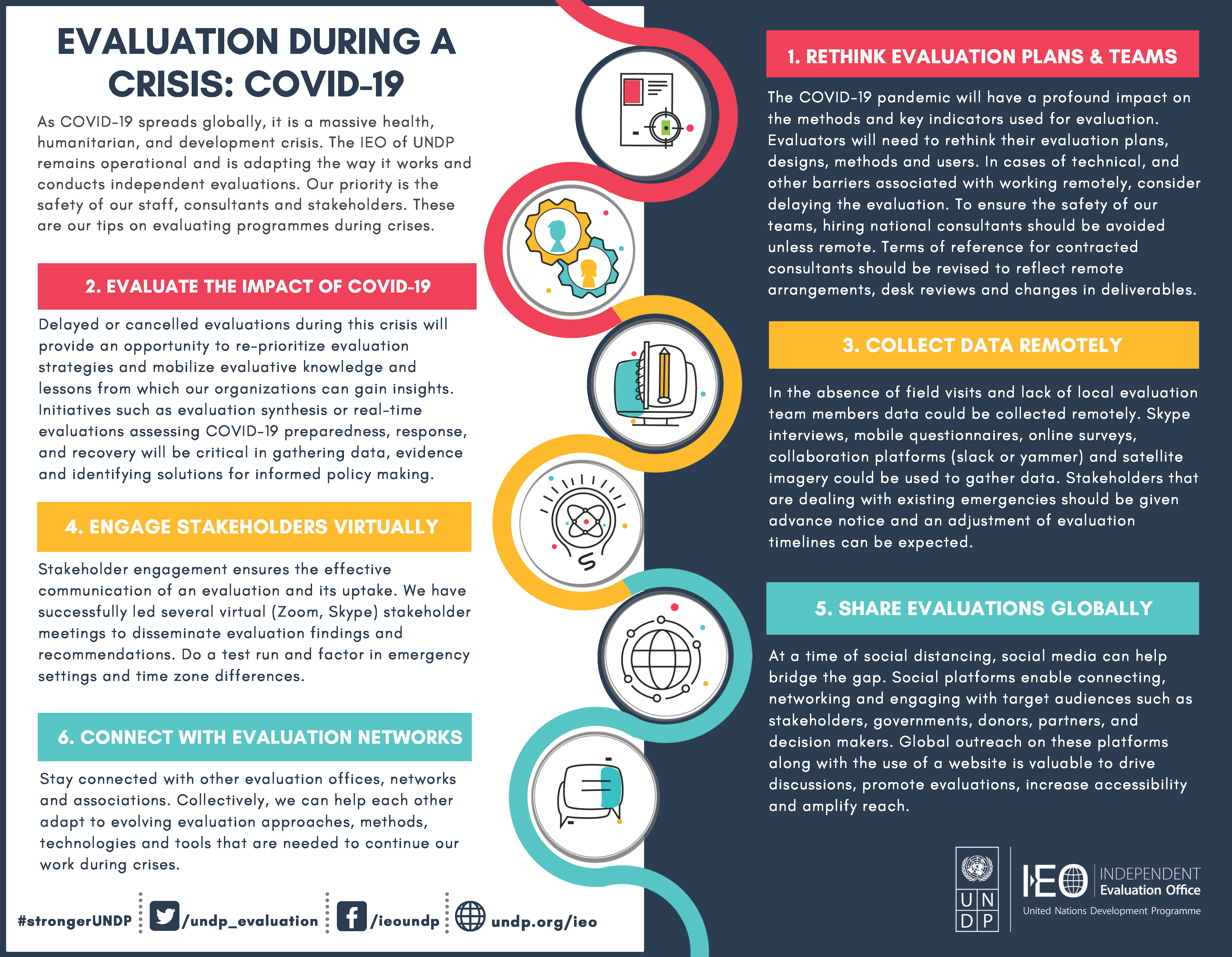

Evaluation during COVID19 – infographic

Here is an informative infographic from the UNDP Independent Evaluation Office on evaluation during COVID-19 (view the PDF version here).

Evaluation and COVID19

There have been many useful and interesting articles on how evaluation can adapt to and cope with the current COVID-19 pandemic. Here is a collection of what I’ve found to date:

Practical tools/advice:

Conducting phone-based surveys during COVID-19

A quick primer on running online events and meetings

Covid-19 crisis: how to adapt your data collection for monitoring and accountability

Think pieces:

Zenda Ofir: Evaluation in times of COVID19 outbreak

World Bank: Conducting evaluations in times of COVID-19

Chris Lysy: The Evaluation Mindset: Evaluation in a Crisis

Michael Quinn Patton: Evaluation Implications of the Coronavirus Global Health Pandemic Emergency

New and better evaluation criteria

The OECD/DAC evaluation criteria, the main guidance used for most evaluations, have been revised. Following a broad consultation, the revised criteria have now been published (pdf).

The main changes are the addition of a new criterion, coherence; a clearer explanation of how to use the criteria; and recognition that the criteria are used across many sectors, not only in development. The explanatory document (pdf) is well worth a read.

A framework for context analysis

Here is an interesting tool to help with context analysis: the ‘Context Matters’ framework, designed to support evidence-informed policy-making. The tool is interactive, and you can view its different elements from various perspectives. Although it was built to support the use of knowledge in policy-making, it could also interest researchers and evaluators as a tool for analysing contexts.

View the interactive framework here>>

Read more about the framework here>>

Thanks to BetterEvaluation for introducing this new resource to me.

New e-learning course: Real-time evaluation and adaptive management

My friends at TRAASS have launched a new e-learning course on real-time evaluation and adaptive management:

“What exactly is an RTE/AM approach and how can it help in unstable or conflict affected situations? Do M&E practitioners need to ditch their standard approaches in jumping on this latest bandwagon? What can you do if there is no counterfactual or dataset? This modular course covers these challenges and more.”