Posts filed under ‘PR evaluation’

Communications evaluation – 2009 trends

Last week I gave a presentation on evaluation for communicators (pdf) at the International Federation of Red Cross and Red Crescent Societies. A communicator asked me what trends I had seen in communications evaluation, particularly those relevant to the non-profit sector. This got me thinking, and here are some of the trends I saw in 2008 that I believe indicate some directions for 2009:

Measuring web & social media: as websites and social media grow in importance for communication programmes, so too does the need to be able to measure their impact. Web analytics has grown in importance, as will the ability to measure social media.

Media monitoring not the be-all and end-all: after many years of organisations focusing only on media monitoring as the means of measuring communications, there is finally some realisation that media monitoring is an interesting gauge of visibility but nothing more. Organisations are now increasingly interested in some qualitative analysis of the data collected (such as looking at how influential the media are, and the tone and importance of the coverage).

Use of non-intrusive or natural data: organisations are also now considering “non-intrusive” or “natural” data – information that already exists – e.g. blog / video posts, customer comments, attendance records, conference papers, etc. As I’ve written about before, this data is underrated by evaluators as everyone rushes to survey and interview people.

Belated arrival of results-based management: despite existing for over 50 years, results-based management or management by objectives is just arriving in many organisations. What does this mean for communicators? It means that at the minimum they have to set measurable objectives for their activities – which is starting to happen. They have no more excuses (pdf) for not evaluating!

Glenn

New portal on communications evaluation

A new portal has been launched on communications evaluation – with plenty of interesting resources. The Communication Controlling portal is supported and updated by experts from Leipzig University and the “Value Creation through Communication” working group of the German Public Relations Association (Deutsche Public Relations Gesellschaft, DPRG). Visit the portal>>

PR Measurement – Catch 22

PR Week has recently published their Marketing Management Survey (pdf). Amongst other subjects, the survey asked US-based marketing executives about measurement and their activities. Ed Moed of the Measuring Up blog points out that the survey “rehashes many of the same weary and misguided perceptions” on PR measurement, notably:

“PR is a difficult one to measure.”

“Clients value PR, but question its value because they think it can’t be measured”

“In an economic climate where budgets are tight, research and measurement are very often the first portions of a PR budget to be cut. Yet measurement is necessary to prove ROI, which can help increase budgets, providing a Catch-22 situation…”

As Ed points out, “People, public relations can be measured.” I couldn’t agree more. Read Ed’s full post on his blog.

Glenn

Evaluating communication products

Organizations spend millions on communication products every year. Brochures, annual reports, corporate videos and promotional materials are produced and distributed to a wide variety of audiences as part of broader communication programmes or as “stand alone” products.

However, working with many different types of organizations, I’ve noticed that little systematic follow-up is undertaken to evaluate how these products are used and what they contribute to achieving communication or organizational goals.

I recently worked on a project where we did just that – we evaluated specific communication products and attempted to answer the following questions:

- Is the product considered to be of high quality in terms of design and content?

- Is the product targeted to the right audiences?

- Is the product available, accessible and distributed to the intended target audiences?

- Is the product used in the manner for which it was intended – and for what other unintended purposes?

- What has the product contributed to broader communication and organizational goals?

- What lessons can be learnt for improving future editions of the product and design, distribution and promotion in general?

The results were quite interesting and surprising. We were also able to map out the use of a given product, like in this example:

You can read more about this approach in this fact sheet (pdf) >>

Glenn

Media evaluation – sentiment analysis

Monitoring of the media often involves assessing the “tone” of a media item – is it positive, negative or neutral on a given subject? This is often done manually. Many of the large media monitoring services offer it, but as a paid service that is not readily available to many.

Now there is a tool available to all to assess the “tone” of a media item (written):

This is what David Phillips of the LeverWealth blog had to say about it:

“This kind of development is useful for analysing sentiment of news articles, blogs and other content, which is its primary purpose but it also has applications in evaluating style and bias all of which are very useful to the PR industry, regulators and watchers of political sentiment on and off line.”
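To give a rough feel for how automated tone assessment can work, here is a minimal sketch of a word-list approach in Python. It is not how the tool mentioned above works (I have no details on that); the word lists and the `tone` function are hypothetical and deliberately tiny, and any real service would use a far larger lexicon or a trained model.

```python
import re

# Hypothetical, deliberately small word lists for illustration only.
POSITIVE = {"good", "great", "success", "successful", "praised", "improved", "welcomed"}
NEGATIVE = {"bad", "poor", "failure", "failed", "criticised", "scandal", "crisis"}


def tone(text: str) -> str:
    """Classify a written media item as positive, negative or neutral.

    Counts words found in each list and compares the two totals.
    """
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for item in (
        "The campaign was a great success",
        "The launch was a failure and a scandal",
        "The report was published on Monday",
    ):
        print(item, "->", tone(item))
```

A simple count like this misses negation, irony and context ("not a success" would still score as positive), which is one reason manual coding of tone is still common.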

Glenn

Internal communications and measurement

For those interested in measuring internal communications (communicating with staff within an organisation), here is an interesting article by Susan Walker from the Handbook of Internal Communication:

“Measurement is not just an optional extra for communicators, but an essential part of their professional tool kit. It has been seen sometimes as a threat (will they cut my budget? will they cut me?) It can be, however, an exciting opportunity to evaluate, guide and direct communication initiatives and investment.”

Glenn

Event scorecard

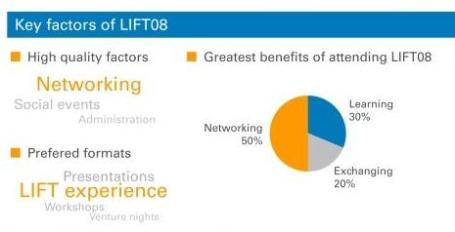

In the work I do to evaluate conferences and events, I have put together what I believe is a “neat” way of displaying the main results of an evaluation: an event scorecard. In the evaluation of a conference that occurs every year in Geneva, Switzerland, the LIFT conference, the scorecard summarises both qualitative and quantitative results taken from the survey of attendees. Above you can see a snapshot of the scorecard.

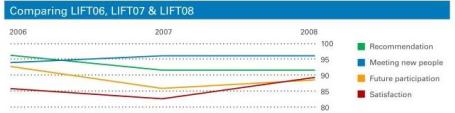

As I have evaluated the conference for three years now, we were also able to show some comparative data as you can see here:

If you are interested, you can view the full scorecard by clicking on the thumbnail image below:

And for the really keen, you can read the full LIFT08 evaluation report (pdf).

Greetings from Tashkent, Uzbekistan from where I write this post. I’m here for an evaluation project and off to Bishkek, Kyrgyzstan now.

Glenn

Perceptions of evaluation

I’ve just spent a week in Armenia and Georgia (pictured above) for an evaluation project where I interviewed people from a cross section of society. These are both fascinating countries, if you ever get the chance to visit… During my work there, I was wondering – what do people think about evaluators? For this type of on-site evaluation, we show up, ask some questions – and leave – and they may never see us again.

From this experience and others I’ve tried to interpret how people see evaluators – and I believe people see us in multiple ways including:

The auditor: you are here to check and control how things are running. Your findings will mean drastic changes for the organisation. Many people see us in this light.

The fixer: you are here to listen to the problems and come up with solutions. You will be instrumental in changing the organisation.

The messenger: you are simply channelling what you hear back to your commissioning organisation. But this is an effective way to pass a message or an opinion to the organisation via a third party.

The researcher: you are interested in knowing what works and what doesn’t. You are looking at what causes what. This is for the greater science and not for anyone in particular.

The tourist: you are simply visiting on a “meet and greet” tour. People don’t really understand why you are visiting and talking to them.

The teacher: you are here to tell people how to do things better. You listen and then tell them how they can improve.

We may have a clear idea of what we are trying to do as evaluators (e.g. to assess results of programmes and see how they can be improved), but we also have to be aware that people will see us in many different ways and from varied perspectives – which just makes the work more interesting…

Glenn

Evaluating advocacy campaigns – No. 2

I’ve written previously about work that others and I have done on evaluating communication and advocacy campaigns, particularly concerning campaigns that aim for both changes in individual behaviour and government/private sector policies.

In this area, here is an interesting article from the Journal of Multidisciplinary Evaluation, “Advocacy Impact Evaluation” (pdf) by Michael Q. Patton. The article explains how an evaluation was undertaken to evaluate the impact of an advocacy campaign to influence a decision of the US Supreme Court.

What I find interesting is how the evaluation was done – what is called the “General Elimination Method”.

This is where there is an effect (the Supreme Court decision) and an intervention (the advocacy campaign), and the evaluators search for connections between the two. They tried to eliminate alternative or rival explanations until the most compelling explanation remained. They did this through interviews and analysis of news, documents and the Court’s decision. The article explains all of this and makes for interesting reading. You can read the article here (pdf).
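The elimination logic can be pictured with a very simplified sketch in Python. This is only an illustration under my own assumptions, not the procedure used in the article: the candidate explanations, the "traces" each would be expected to leave, and the observed evidence are all hypothetical, and in practice the weighing of evidence is a matter of judgement rather than a set comparison.

```python
def eliminate(explanations: dict[str, set[str]], observed: set[str]) -> list[str]:
    """Return the explanations whose expected traces were all observed.

    An explanation is eliminated when at least one trace it should have
    left behind is missing from the evidence collected.
    """
    return [name for name, traces in explanations.items() if traces <= observed]


# Hypothetical candidate explanations and the traces each would leave.
explanations = {
    "advocacy campaign": {"campaign arguments echoed in decision", "campaign cited in news"},
    "change in public opinion": {"shift in opinion polls"},
    "unrelated legal precedent": {"precedent cited in decision"},
}

# Hypothetical evidence gathered from interviews, news and documents.
observed = {"campaign arguments echoed in decision", "campaign cited in news"}

if __name__ == "__main__":
    print(eliminate(explanations, observed))
```

With this made-up evidence, only the advocacy campaign survives, since the rival explanations each lack a trace they would have been expected to leave.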

Glenn

Hints on interviewing for evaluation projects

Evaluators often use interviews as a primary tool to collect information. Many guides and books exist on interviewing – but not so many for evaluation projects in particular. Here are some hints on interviewing based on my own experiences:

1. Be prepared: No matter how wide-ranging you would like an interview to be, you should as a minimum note down some subjects you would like to cover or particular questions to be answered. A little bit of structure will make the analysis easier.

2. Determine what is key for you to know: Before starting the interview, you might have a number of subjects to cover. It may be wise to determine what is key for you to know – what are the three to four things you would like to know from every person interviewed? Often you will get side-tracked during an interview and later on going through your notes you may discover that you forgot to ask about a key piece of information.

3. Explain the purpose: Before launching into questions, explain in broad terms the nature of the evaluation project and how the information from the discussion will be used.

4. Take notes as you discuss: Even if it is just the main points. Do not rely on your memory as after you have done several interviews you may mix up some of the responses. Once the interview has concluded try to write further on the main points raised. Of course, recording and then transcribing interviews is recommended but not always possible.

5. Take notes about other matters: It’s important also to note down not only what a person says but how they say it – you need to look out for body language, signs of frustration, enthusiasm, etc. Any points of this nature I would normally note down at the end of my interview notes. This is also important if someone else reads your notes in order for them to understand the context.

6. Don’t offer your own opinion or indicate a bias: Your main role is to gather information and you shouldn’t try to defend a project or enter into a debate with an interviewee. Remember, listening is key!

7. Have interviewees define terms: If someone says “I’m not happy with the situation”, you have understood that they are not happy but not much more. Have them define what they are not happy about. It’s the same if an interviewee says “we need more support”. Ask them to define what they mean by “support”.

8. Ask for clarification, details and examples: Such as “why is that so?”, “can you provide me with an example?”, “can you take me through the steps of that?” etc.

Hope these hints are of use.

Glenn