Webinar: Understanding and supporting networks: learning from theory and practice – May 5

Here is an interesting webinar (online conference) on “Understanding and supporting networks: learning from theory and practice”:

NGOs join them, researchers collaborate across them, civil society rallies around them, policy makers are influenced by them and donors are funding them. Networks are a day-to-day reality and an important mode of working for almost all of us in the aid sector. They are increasingly being used as a vehicle for delivering different kinds of development interventions, from policy influencing and knowledge generation to changing practices on the ground. But how often do we pause and reflect on what it means to engage in a network, or think about how networks work and how they could work better?

This webinar will present two papers by the Overseas Development Institute that challenge the current ubiquity of networks and offer ideas and reflections for those facilitating networks. Ben Ramalingam will present his paper, Mind the Network Gaps, in which he reviews the aid network literature and identifies theoretical lenses that could help advance thinking and practice.

Enrique Mendizabal and Simon Hearn will discuss a revised version of the Network Functions Approach and how it can be used to establish a clear mandate for a network, and hence to avoid situations where networks are established without consideration of the costs.

Following the two presentations, we will hear comments and discussion from two experts in the field: Rick Davies, an evaluation consultant and moderator of the mande.co.uk website, and Nancy White (www.fullcirc.com), an expert on communities of practice and online facilitation and author of the book ‘Digital Habitats’.

More information and registration>>

Thanks to the On Think Tanks blog for bringing this to our attention.

Ten takeaways on evaluating advocacy and policy change

The Harvard Family Research Project produce some excellent material on advocacy evaluation.

From their newsletter (pdf), here are ten takeaways on evaluating advocacy and policy change:

1. Advocacy evaluation has become a burgeoning field.

2. Advocacy evaluation is particularly challenging when approached with a traditional program evaluation mindset.

3. The goals of advocacy and policy change efforts—that is, whether a policy or appropriation was achieved—typically are easy to measure.

4. Many funders’ interest in advocacy evaluation is driven by a desire to help advocates continuously improve their work, rather than to prove that advocacy is a worthy investment.

5. Advocates must often become their own evaluators. Because of their organizational size and available resources, evaluation for many advocates requires internal monitoring and tracking of key measures rather than external evaluation.

6. External evaluators can play critical roles.

7. Context is important.

8. Theories of change and logic models that help drive advocacy evaluation should be grounded in theories about the policy process.

9. Measures must mean something.

10. Evaluation creativity is important.

Media attention & importance

Which media get most of our attention and are important to us? This was the question asked in a recent study (pdf) by Ofcom, the UK communications regulator.

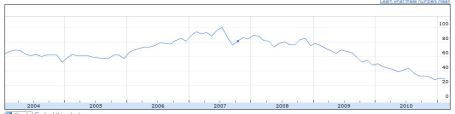

The graph above, taken from this report, shows that the media that get the most attention and are the most important to us (based on UK audiences) are:

- mobile calls

- mobile texting/mobile video/print media

Those that get the least attention and are the least important are:

- radio

- recorded TV programmes

Interestingly, social media (mobile) sits in the middle, and TV gaming (e.g. PlayStation, Nintendo, Xbox) gets high attention but is of low importance (watch any teenager “glued” to these games and you know the score…).

So the death of email still seems far off… as does that of print media, although we might have our doubts on both of these… and poor radio, it is a survivor!

It also indicates that most of the media that are important and grab our attention are interpersonal media, not mass media. The only exception is print media, which is a surprise. Then again, the “print” internet (news websites) is missing from the chart (as is social networking on the internet), and I’d guess it would sit close to print media.

Conference evaluation – at a glance

For those interested in evaluating conferences, events and seminars, the following presentation may be of interest to you.

With Laetitia Lienart of the International AIDS Society, I presented an overview of conference evaluation for the Geneva Evaluation Network last Wednesday.

Measuring success in online communities – part 2

Further to my earlier post on measuring online communities, I had the opportunity last weekend to present a module on this subject to a group of students following the SAWI diploma on “Spécialiste en management de communautés & médias sociaux”.

The slides used for this presentation are below. They are in French; an English translation will come… soon!

Best practice guide for using statistics in communications

A new publication from the UK-based CIPR (Chartered Institute of Public Relations) covers using statistics in communications. Key points include:

- Why statistics are used

- What statistical information should be included in PR activities

- Analysis

- Reporting on survey methodology

- What common statistical terms mean – and pitfalls to watch out for

- Common pitfalls that can undermine your message

Employee engagement is cool. Employee surveys are not

Using Google Analytics to track the relative value of your Offer

Some lessons for the communications evaluation profession

It has been some time since I looked at my Google Analytics account. A pity, because it can reveal some dramatic insights into global trends, and the quality and mine-ability of the data is improving month by month.

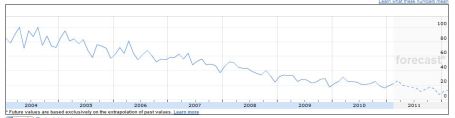

I wanted to see what was happening in Benchpoint’s main marketplace, which is specialist online surveys of employee opinion in large companies. So I looked up “employee surveys”. I was surprised (and shocked) to see that Google searches for this term had declined since their peak in 2004 to virtual insignificance.

This was worrying, because our experience is that the sector is alive and well, with growing competition.

On the whole, we advise against general employee surveys, preferring surveys which gain insight into specific areas.

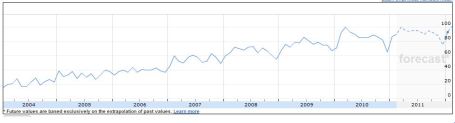

So I contrasted this with a search for “Employee Engagement”, on its own. The opposite trend! This search term has enjoyed steady growth, with the main interest coming from India, Singapore, South Africa, Malaysia, Canada and the USA, in that order.

“Employee engagement surveys”, which first appeared in Q1 2007, also shows a contrarian trend, with most interest coming from India, Canada, the UK and the USA.

Looking at the wider market, here is the chart for the search term “Surveys”: a steady decline since 2007.

But contrast this with searches for “Survey Monkey”.

Where is all this leading us? Google is remarkably good at recording what’s cool and what’s not, in great detail and in real time. There are plenty of geeks out there who earn good money doing this analysis for the big international consumer companies. And what it tells us is that, more than ever, positioning is key.

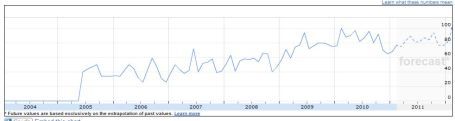

Our own field, “Communications Evaluation”, is fairly uncool. Maybe we need to invent a new, sexy descriptor for what we do?

But note, on the chart below, the peaks in the autumn of 2009 and 2010, when the AMEC Measurement Summits were held. Sudden spikes in interest.

This blog and Benchpoint have the copyright of “Intelligent measurement”, which is holding its own in the visibility and coolness stakes, with this blog giving it a boost way back in 2007…

Conclusions:

- Get a Google Analytics account, start monitoring the keywords people use to search for your business activity, and adapt your website accordingly

- As an interest group/profession, we probably need to adopt a different description of what we do if we wish to maintain visibility and influence. Suggestions anyone? Discuss!

Sorry for such a long post!

Richard

Latest Benchpoint survey on project management rewards, conditions and climate

Readers who are self-employed, or who work in small consultancies, may find this report from Benchpoint useful.

This is its fourth annual survey of the UK project management market, and there are similarities between this population and others working as self-employed consultants in the communications and evaluation field.

The survey looks at rewards (salaried vs self-employed), working conditions and the economic climate, as well as some issues specific to project managers.

Advocacy campaigns and policy influence

Influencing policy is an aim of many advocacy campaigns: trying to bring about change in the policies of governments, the private sector or even international organisations (e.g. the UN). Here are two interesting publications in this area:

“Pathways for change: 6 Theories about How Policy Change Happens” (pdf), from the US-based Organizational Research Services, describes different theories of how policy change can occur. It is interesting reading for those trying to influence policy.

“A guide to monitoring and evaluating policy influence” (pdf), from the UK-based Overseas Development Institute, describes different approaches to evaluating policy influence, i.e. how you can evaluate your efforts to influence policy.

Both publications are worth a read if you are interested in policy influence and advocacy campaigning.

New Google group on conference evaluation

If you are interested in the evaluation of events and conferences, then I’d suggest you join the new Google group on this subject:

http://groups.google.com/group/conference_evaluation?hl=en

Join the conversation!