One-day workshop in Bern: The politics of evaluation; 2 November 2019

Join a one-day workshop in Bern, Switzerland, on the politics of evaluation, taught by Dr. Marlène Läubli Loud.

The course will look at why and how evaluation is itself a political activity and, consequently, how the «political» interests of the various partners involved can play an important part in influencing the evaluation process. We will look at aspects of political influence and practise managing them to avoid conflicts of interest and minimise risks.

New resource: No Royal Road: Finding and Following the Natural Pathways in Advocacy Evaluation

Jim Coe and Rhonda Schlangen have published a very interesting paper on advocacy evaluation.

They highlight six factors that they believe should change in the monitoring and evaluation of advocacy:

1. Better factor in uncertainty.

2. Plan for unpredictability.

3. Redefine contribution as combinational and dispositional.

4. Parse outcomes and their significance.

5. Break down barriers to engaging advocates in monitoring and evaluation.

6. Think differently about how we evaluate more transformational advocacy.

Sketches from communication evaluation summit

If, like me, you missed the AMEC Summit on communication evaluation in May 2019, Redanredan has produced some great summary sketches of the main sessions. See an example below; they are well worth checking out:

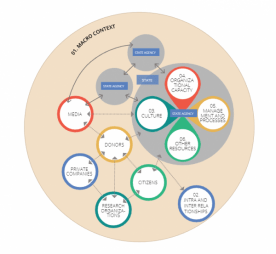

A framework for context analysis

Here is an interesting tool to help with context analysis: the ‘Context Matters’ framework, which aims to support evidence-informed policy-making. The tool is interactive, and you can view its different elements from various perspectives. Although it was designed to support the use of knowledge in policy-making, it could also be of interest to researchers and evaluators as an analytical tool for contexts.

View the interactive framework here>>

Read more about the framework here>>

Thanks to Better Evaluation for introducing this new resource to me.

Using infographics to present evaluation findings

I’ve written previously about using infographics to summarise evaluation findings. Here is another recent example in which my evaluation team used an infographic to present the findings of an evaluation. This is only a partial view; you can see the complete infographic on page 5 of this report (pdf).

New e-learning course: Real-time evaluation and adaptive management

My friends at TRAASS have launched a new e-learning course on Real-time evaluation and adaptive management:

“What exactly is an RTE/AM approach and how can it help in unstable or conflict affected situations? Do M&E practitioners need to ditch their standard approaches in jumping on this latest bandwagon? What can you do if there is no counterfactual or dataset? This modular course covers these challenges and more.”

Humanitarian advocacy – an introduction

For those interested in humanitarian work and advocacy, this presentation may be of interest. In it, I explain what humanitarian advocacy is: its definition, levels, process and challenges.

Originally presented at CERAH as part of their Masters in Humanitarian Action.

November is communication measurement month!

November is AMEC’s communication measurement month. There are some great events going on all over the world; check out the calendar of events >>

Example: mixed methods in evaluation

We often talk about using mixed methods in evaluation, but we rarely see examples that go beyond a combination of surveys and interviews. So I wanted to share an evaluation that I thought made good use of a variety of methods. I was part of a team (of the Independent Evaluation Office) that carried out an evaluation of knowledge management at the Global Environment Facility.

The methods we used included:

- Semi-structured interviews

- Online surveys

- Comparative study of four organisations

- Meta-analysis of country-level evaluations

- Citation analysis (qualitative and quantitative)

The image shows a visualisation of the citation analysis by theme (carried out by Matteo Borzoni); interesting stuff! I feel that the range of data collected gave us a very solid evidence base for our findings. The report is publicly available and can be viewed here (pdf)>>

Practical lessons on influencing policy

A recent issue of the journal Policy and Politics has a special focus on influencing policy, mostly on the work of research and academia in this respect. There are many parallels with advocacy and policy influence work in general, and I found this particular lesson highly relevant:

“Avoid relying too much only on evidence and analyses, instead combine evidence with framing strategies and storytelling”

The introduction to the issue is free to access and can be viewed here>>