Posts filed under ‘Evaluation tools (surveys, interviews..)’

EU Manual: Evaluating Legislation and Non-Spending Interventions in the Area of Information Society and Media

A very interesting manual published by the European Union:

Despite the wordy title, the manual is really about how to evaluate the effects of legislation and initiatives taken by governments (in this case, the EU as a regional body).

The toolbox on page 72 is well worth a look.

Employee engagement is cool. Employee surveys are not

Using Google Analytics to track the relative value of your Offer

Some lessons for the communications evaluation profession

It is some time since I looked at my Google Analytics account. A pity, because it can reveal some dramatic insights into global trends. And the quality and mine-ability of the data is improving month by month.

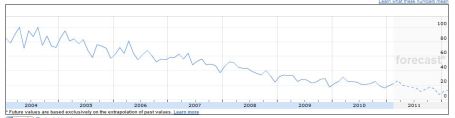

I wanted to see what was happening in Benchpoint’s main marketplace, which is specialist online surveys of employee opinion in large companies. So I looked up “employee surveys”. I was surprised (and shocked) to see that Google searches for this term had declined since their peak in 2004 to virtual insignificance.

This was worrying, because our experience is that the sector is alive and well, with growing competition.

On the whole, we advise against general employee surveys, preferring surveys which gain insight into specific areas.

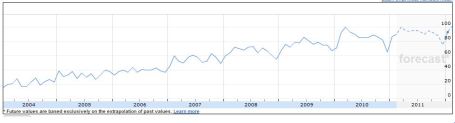

So I contrasted this with a search for “Employee Engagement”, on its own. The opposite trend! This search term has enjoyed steady growth, with the main interest coming from India, Singapore, South Africa, Malaysia, Canada and the USA, in that order.

“Employee engagement surveys”, which first appeared in Q1 2007, also shows a contrarian trend, with most interest coming from India, Canada, the UK and the USA.

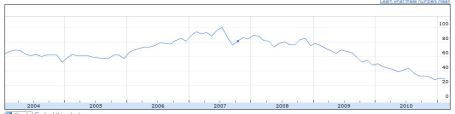

Looking at the wider market, here is the chart for the search term “Surveys”: a steady decline since 2007.

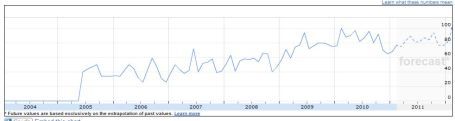

But contrast this with searches for “Survey Monkey”

Where is all this leading us? Google is remarkably good at recording what’s cool and what’s not, in great detail and in real time. There are plenty of geeks out there who earn good money doing this for the big international consumer companies. And what it tells us is that, more than ever, positioning is key.

Our own field, “Communications Evaluation”, is fairly uncool. Maybe we need to invent a new, sexier descriptor for what we do?

But note, on the chart below, the sudden spikes in interest in the autumn of 2009 and 2010, when the AMEC Measurement Summits were held.

This blog and Benchpoint have the copyright of “Intelligent measurement”, which is holding its own in the visibility and coolness stakes, with this blog giving it a boost way back in 2007…

Conclusions:

- Get a Google Analytics account, start monitoring the keywords people use to search for your business activity, and adapt your website accordingly

- As an interest group/profession, we probably need to adopt a different description of what we do if we wish to maintain visibility and influence. Suggestions anyone? Discuss!

Sorry for such a long post!

Richard

Data visualisation – the many possibilities

I am always interested in learning of different ways to represent data visually.

Well, here is something that will fascinate you if you are also interested in the many possibilities of displaying data.

Visual-literacy.org have produced a fantastic “Periodic Table of Visualization Methods” (reduced version shown above). Inspired by the periodic table from chemistry, they have listed and categorised virtually every possible type of data visualization. The only type I can see missing is the “word cloud”.

Traditional surveys – how reliable are they?

Here is an interesting article from the Economist about political polling in the US. The article discusses the increasing difficulties in conducting polls or surveys that assess voting intentions in the US.

Most polling companies, in the US and elsewhere, conduct their surveys by calling landline (fixed-line) phones. But fewer and fewer people are using landlines: the article states that some 25% of US residents now have only a mobile phone. Polling companies often don’t call mobile phones, for various reasons mostly related to cost. So the conclusion is: be careful when looking at survey results based on this traditional approach.

Interestingly, the article did not mention the growth of Internet surveying, or the possibility of surveying via smartphones.

This article from the FiveThirtyEight blog provides more insight into the issue: it mentions the growth of Internet polling and is less pessimistic about the future of traditional surveys.

For evaluation, the debate is interesting, as we often use surveys as a tool, and many of the points discussed are relevant to the surveying undertaken for large-scale evaluations.

The theory of change explained…

I have found the “theory of change” very useful in evaluation: it maps out how a given intervention is expected to bring about change, from activities through to impact.

So for those interested in knowing more, Organizational Research have produced a two-page guide (pdf) to the theory of change: all you need to know, in brief!

Help wanted: 5-point Likert or 10-point numerical?

Here’s one for you, our readers.

Benchpoint is currently designing a survey for a client. Most of the questions have 5-point Likert scales:

- Very satisfied

- Satisfied

- Neither satisfied nor dissatisfied

- Slightly dissatisfied

- Very dissatisfied

However, the client wishes to include one question with a 10-point numerical scale, where 9 is extremely satisfied and 0 is extremely dissatisfied.

We say we should stick to the same scale throughout the survey, and that a 5-point descriptive scale is better than a 10-point numerical scale.
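One practical cost of mixing scales shows up at the analysis stage: the 0 to 9 answers have to be converted before they can be set beside the 5-point items. The sketch below assumes a simple linear mapping, which is our own illustrative assumption rather than a standard method, and the names `LIKERT_5`, `code_likert` and `rescale_0_9_to_1_5` are hypothetical.

```python
# The five labels from the client survey, ordered negative to positive.
LIKERT_5 = [
    "Very dissatisfied",
    "Slightly dissatisfied",
    "Neither satisfied nor dissatisfied",
    "Satisfied",
    "Very satisfied",
]


def code_likert(label: str) -> int:
    """Code a 5-point label as 1 (most negative) to 5 (most positive)."""
    return LIKERT_5.index(label) + 1


def rescale_0_9_to_1_5(score: int) -> float:
    """Linearly map a 0-9 rating onto 1-5 (assumes equal spacing between points)."""
    if not 0 <= score <= 9:
        raise ValueError("score must be between 0 and 9")
    return 1 + 4 * score / 9


print(code_likert("Satisfied"))   # 4
print(rescale_0_9_to_1_5(9))      # 5.0
print(rescale_0_9_to_1_5(5))      # about 3.22, between two labelled points
```

A 7 or 8 on the numerical question lands between “Satisfied” and “Very satisfied” (about 4.11 and 4.56), so reporting it against the labelled scale needs a further judgement call, which is one more argument for keeping a single scale.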

What do our readers think?

Workshop on communications evaluation

I recently conducted a one-day training workshop on communications evaluation for the staff of Gellis Communications. We looked at several aspects, including:

- How to evaluate communication programmes, products and campaigns;

- How to use the “theory of change” concept;

- Methods specific to communication evaluation including expert reviews, network mapping and tracking mechanisms;

- Options for reporting evaluation findings;

- Case studies and examples on all of the above.

Gellis Communications and I are happy to share the presentation slides used during the workshop; just see below. (These were combined with practical exercises; write to me if you would like copies.)

Evaluating online communication tools

Online tools such as corporate websites, members’ directories and portals increasingly play an important role in communications strategies. And of course, it is increasingly important to evaluate them.

I have just concluded an evaluation of an online tool created to facilitate the exchange of information amongst a specific community. The tool in question, the Central Register of Disaster Management Capacities, is managed by the United Nations Office for the Coordination of Humanitarian Affairs.

The evaluation methodology that I used for evaluating this online tool is interesting as it combines:

- Content analysis

- Network mapping

- Online survey

- Interviews

- Expert review

- Web metrics

And for once, you can dig into the methodology and findings, as the evaluation report is publicly available: View the full report here (pdf)

Likert Scale & surveys – more discussion...

I’m currently in Brussels for some evaluation training with Gellis Communications, and our discussions turned to the use of the Likert scale in surveys. As I’ve written about before, the Likert scale (named after its creator, pictured above) is a widely used response scale in surveys. My earlier post spoke about the importance of labelling the points on the scale and of not using too many points (most people can’t place their opinion on a scale of more than seven). Here are several other issues that have come up recently:

To use an even or odd scale: there is an ongoing debate as to whether a Likert scale should have an odd number of points (five, for example) or an even number (four, for example). Some advocate an odd scale, where respondents can choose a “neutral” middle point, whereas others prefer to “force” people to take a negative or positive position with an even scale. There is also no consensus on whether to offer a “don’t know” option. I personally believe that a “don’t know” option is essential on some scales, where people may simply not have an opinion. However, studies are inconclusive as to whether such an option increases the accuracy of responses.
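If a “don’t know” option is offered, it matters how it is scored: treating it as the neutral midpoint quietly pulls averages toward the middle. A minimal sketch, assuming answers are already coded 1 to 5 and that “don’t know” is recorded as `None` (both assumptions for illustration; `summarise` is a hypothetical name):

```python
from statistics import mean
from typing import Optional


def summarise(responses: list[Optional[int]]) -> dict:
    """Average the coded answers, treating "don't know" (None) as missing, not neutral."""
    answered = [r for r in responses if r is not None]
    return {
        "n_answered": len(answered),
        "n_dont_know": len(responses) - len(answered),
        "mean": mean(answered) if answered else None,
    }


print(summarise([5, 4, None, 2, None]))  # mean is taken over 5, 4 and 2 only
```

Here the mean is 11 / 3, about 3.67; scoring the two “don’t know” answers as a neutral 3 instead would give (5 + 4 + 3 + 2 + 3) / 5 = 3.4. Reporting the count of “don’t know” answers alongside the mean keeps that information visible.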

Left to right or right to left: I always advocate displaying scales from negative to positive, left to right. It seems more logical to me, and some automated survey software scores answers and calculates the results for graphs on this basis, i.e. treating the first point as the lowest. But I have heard others argue that it should be the opposite way around (positive to negative, left to right), as people will click on the first point by default in online surveys, which I personally don’t believe. I have not yet found any academic reference supporting either approach, but of all the examples I have seen in academic articles, 95% run from negative to positive, left to right: some evidence in itself!
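The software behaviour described here amounts to scoring each answer by its on-screen position. A minimal sketch of what that implies, assuming positions are numbered from 1 at the left (the function name and parameters are hypothetical): if a scale is displayed positive to negative, the positional code has to be reversed before it is pooled with negative-to-positive items.

```python
def score_answer(position: int, n_points: int = 5, positive_first: bool = False) -> int:
    """Turn a clicked position (1 = leftmost) into a score where 1 is most negative.

    Assumes, as some survey software does, that the leftmost point is the lowest;
    when the scale was shown positive-to-negative, the code is reversed.
    """
    if not 1 <= position <= n_points:
        raise ValueError(f"position must be between 1 and {n_points}")
    return n_points + 1 - position if positive_first else position


print(score_answer(1))                       # 1: leftmost on a negative-to-positive scale
print(score_answer(1, positive_first=True))  # 5: leftmost on a positive-to-negative scale
```

Forgetting the reversal would silently flip results for any question laid out the “other” way, which is one practical reason to keep a single left-to-right convention throughout a questionnaire.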

Mr Likert you have a lot to answer for!

Weighted checklists as an evaluation tool

As I’ve written about previously, checklists are an often overlooked and underrated tool for evaluation projects.

In this post, Rick Davies explains the “weighted checklist” and how it can be used in evaluation. “Weighted” means that each item or attribute on the checklist is given an importance relative to the other items (more, the same or less), and these weights all tally up in the overall assessment (see an example here).
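As a rough illustration of how the weights might tally up, here is a minimal sketch, assuming each item is simply met or not met and the overall score is the share of total weight achieved. The items and weights are invented for illustration, and Davies’s own example may combine them differently.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    name: str
    weight: float  # relative importance (hypothetical values below)
    met: bool      # whether the attribute is present


def weighted_score(items: list[ChecklistItem]) -> float:
    """Share of the total weight achieved by the items that are met (0.0 to 1.0)."""
    total = sum(item.weight for item in items)
    if total <= 0:
        raise ValueError("total weight must be positive")
    achieved = sum(item.weight for item in items if item.met)
    return achieved / total


# Hypothetical checklist, for illustration only.
checklist = [
    ChecklistItem("Clear objectives", weight=3, met=True),
    ChecklistItem("Baseline data collected", weight=2, met=False),
    ChecklistItem("Stakeholders consulted", weight=1, met=True),
]
print(f"{weighted_score(checklist):.2f}")  # 4 / 6 -> 0.67
```

With equal weights the same checklist would score 2 / 3 as well; the weighting only changes the result when the items that are missed differ in importance from those that are met.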